Training Deep Neural Networks on a GPU with PyTorch

Part 4 of "PyTorch: Zero to GANs"

This post is the fourth in a series of tutorials on building deep learning models with PyTorch, an open-source neural network library. Check out the full series:

- PyTorch Basics: Tensors & Gradients

- Linear Regression & Gradient Descent

- Image Classification using Logistic Regression

- Training Deep Neural Networks on a GPU

- Coming soon.. (CNNs, RNNs, GANs etc.)

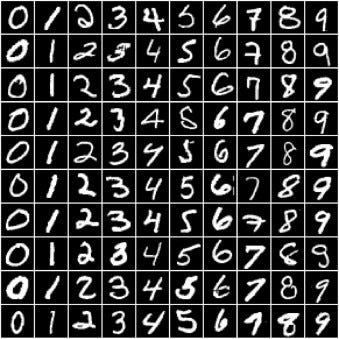

In the previous tutorial, we trained a logistic regression model to identify handwritten digits from the MNIST dataset with an accuracy of around 86%.

However, we also noticed that it's quite difficult to improve the accuracy beyond 87%, because logistic regression can only learn a linear relationship between the pixel values and the class scores. In this post, we'll try to improve upon it using a feedforward neural network.

System Setup

If you want to follow along and run the code as you read, you can clone this notebook, install the required dependencies using conda, and start Jupyter by running the following commands on the terminal:

pip install jovian --upgrade # Install the jovian library

jovian clone fdaae0bf32cf4917a931ac415a5c31b0 # Download notebook

cd 04-feedforward-nn # Enter the created directory

jovian install # Install the dependencies

conda activate 04-feedfoward-nn # Activate virtual env

jupyter notebook # Start Jupyter

On older versions of conda, you might need to run source activate 04-feedfoward-nn to activate the virtual environment. For a more detailed explanation of the above steps, check out the System setup section in the first notebook.
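Once the environment is active, a quick sanity check can confirm that PyTorch imports correctly and show whether a GPU is visible. The snippet below is only a minimal sketch and not part of the original notebook: the names `device`, `x` and `total` are chosen here for illustration, and the code falls back to the CPU when no CUDA device is found.

```python
import torch

# Report the installed PyTorch version and whether a CUDA-capable GPU is visible.
print("PyTorch version:", torch.__version__)
print("CUDA available:", torch.cuda.is_available())

# Pick a device, falling back to the CPU when no GPU is present.
# (`device` is an illustrative name, not something defined by the notebook.)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Create a small tensor on that device to confirm basic operations work.
x = torch.ones(2, 3, device=device)
total = x.sum().item()
print("Device:", device, "| sum of a 2x3 tensor of ones:", total)
```

If the import fails, the environment was probably not activated. If CUDA is reported as unavailable, the code in this tutorial should still run on the CPU, only more slowly.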

Preparing the Data

The data preparation is identical to the previous tutorial. We begin by importing the required modules & classes.

import torch

import numpy as np

import torchvision

from torchvision.datasets import MNIST

from torchvision.transforms import ToTensor

from torch.utils.data.sampler import SubsetRandomSampler

from torch.utils.data.dataloader import DataLoader

We download the data and create a PyTorch dataset using the MNIST class from torchvision.datasets.