PyTorch Basics: Tensors & Gradients

Part 1 of "PyTorch: Zero to GANs"

This post is the first in a series of tutorials on building deep learning models with PyTorch, an open-source neural network library developed and maintained by Facebook. Check out the full series:

- PyTorch Basics: Tensors & Gradients

- Linear Regression & Gradient Descent

- Image Classification using Logistic Regression

- Training Deep Neural Networks on a GPU

- Coming soon... (CNNs, RNNs, GANs, etc.)

This series attempts to make PyTorch a bit more approachable for people starting out with deep learning and neural networks. In this post, we'll cover the basic building blocks of PyTorch models: tensors and gradients.

System setup

This tutorial takes a code-first approach towards learning PyTorch, and you should try to follow along by running and experimenting with the code yourself. We'll use the Anaconda distribution of Python to install libraries and manage virtual environments. For interactive coding and experimentation, we'll use Jupyter notebooks. All the tutorials in this series are available as Jupyter notebooks hosted on Jovian: a sharing and collaboration platform for Jupyter.

Jovian makes it easy to share Jupyter notebooks on the cloud by running a single command directly within Jupyter. It also captures the Python environment and libraries required to run your notebook, so anyone (including you) can reproduce your work.

Here's what you need to do to get started:

- Install Anaconda by following the instructions given here. You might also need to add the Anaconda binaries to your system PATH to be able to run the conda command line tool.

- Install the jovian Python library by running the following command (without the $) on your Mac/Linux terminal or Windows command prompt:

$ pip install jovian --upgrade

- Download the notebook for this tutorial using the jovian clone command:

$ jovian clone <notebook_id>

(You can get the notebook_id by clicking the 'Clone' button at the top of this page on https://jvn.io)

Running the clone command creates a directory 01-pytorch-basics containing a Jupyter notebook and an Anaconda environment file.

$ ls 01-pytorch-basics

01-pytorch-basics.ipynb environment.yml

- Now we can enter the directory and install the required Python libraries (Jupyter, PyTorch etc.) with a single command using jovian:

$ cd 01-pytorch-basics

$ jovian install

jovian reads the environment.yml file, identifies the right dependencies for your operating system, creates a virtual environment with the given name (01-pytorch-basics by default), and installs all the required libraries inside that environment, so your system-wide installation of Python is left unmodified. It uses conda internally. If you face issues with jovian install, try running conda env update instead.

- We can activate the virtual environment by running

$ conda activate 01-pytorch-basics

For older installations of conda, you might need to run the command: source activate 01-pytorch-basics.

- Once the virtual environment is active, we can start Jupyter by running

$ jupyter notebook

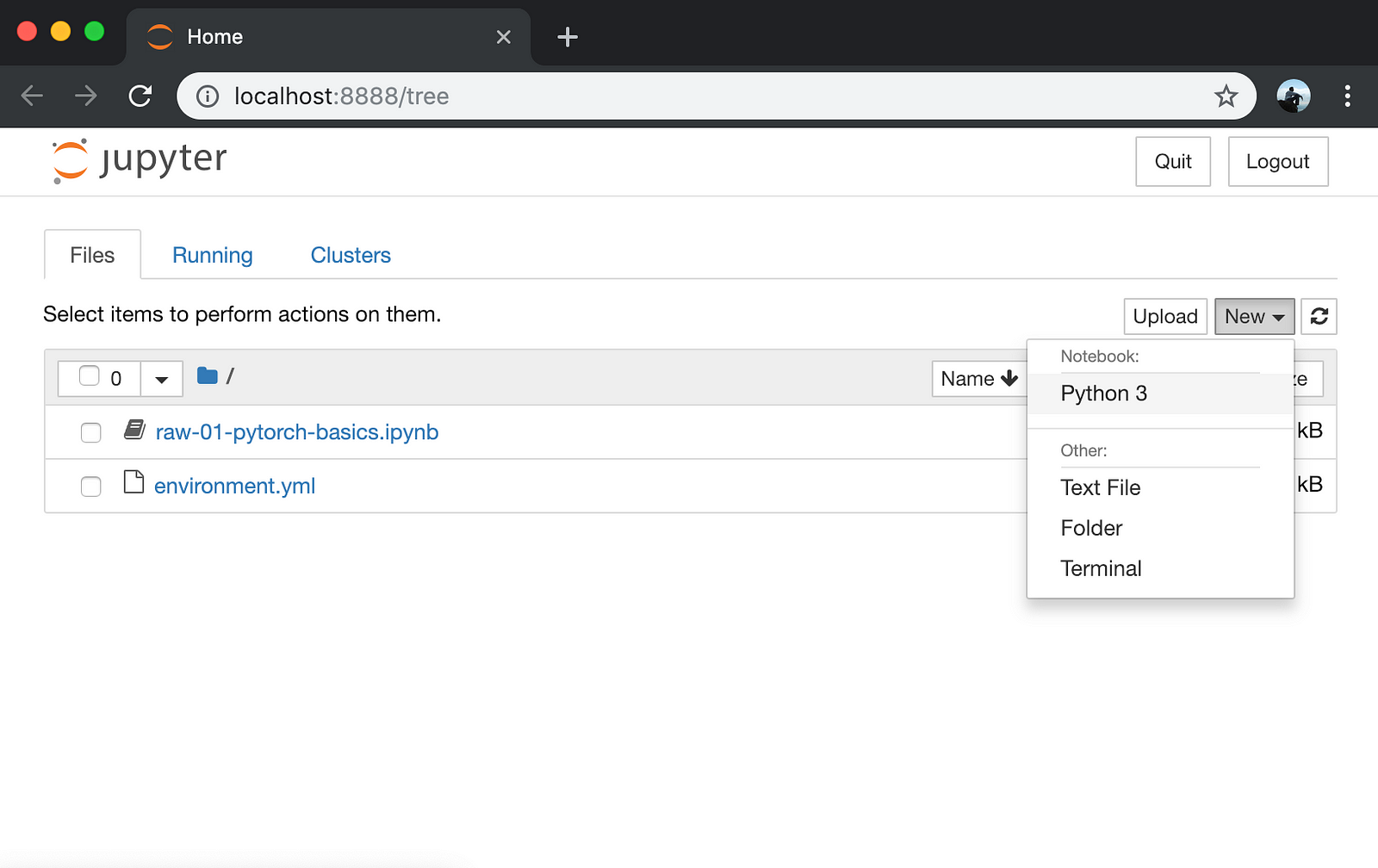

- You can now access Jupyter's web interface by clicking the link that shows up in the terminal or by visiting http://localhost:8888 in your browser.

At this point, you can click on the notebook 01-pytorch-basics.ipynb to open it and run the code. If you want to type out the code yourself, you can also create a new notebook using the 'New' button.
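Before going further, it can help to confirm that the notebook is really running inside the virtual environment created above and that PyTorch is visible from it. The cell below is a minimal sketch, not part of the original notebook: the helper name is_installed is hypothetical, and it relies only on Python's standard importlib and sys modules plus the torch.__version__ attribute.

```python
import importlib.util
import sys


def is_installed(package_name: str) -> bool:
    """Return True if a top-level package can be imported in this environment."""
    # find_spec returns None when no importable top-level module has this name
    return importlib.util.find_spec(package_name) is not None


# The path should point inside the conda environment (e.g. one named
# 01-pytorch-basics) if the environment was activated before starting Jupyter.
print("Python executable:", sys.executable)

if is_installed("torch"):
    import torch
    print("PyTorch version:", torch.__version__)
else:
    print("PyTorch not found; check that the environment is active.")
```

If the executable path points at your system-wide Python rather than the environment, stop Jupyter, activate the environment again, and restart Jupyter from the same terminal.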

We begin by importing PyTorch:

import torch

Tensors

At its core, PyTorch is a library for processing tensors. A tensor is a number, vector, matrix or any n-dimensional array. Let's create a tensor with a single number: