This notebook requires Python >= 3.6 and fastai==1.0.52

Objective

In this notebook we will finetune pre-trained BERT model on The Microsoft Research Paraphrase Corpus (MRPC). MRPC is a paraphrase identification dataset, where systems aim to identify if two sentences are paraphrases of each other.

BERT

Overview:

- Trained on BookCorpus and English Wikipedia (800M and 2,500M words respectively).

- Training time approx. about a week using 64 GPUs.

- State-Of-The-Art (SOTA) results on SQuAD v1.1 and all 9 GLUE benchmark tasks.

Architecture:

Embedding Layers

-

Token embeddings, Segment embeddings and Position embeddings.

-

[SEP] token to mark the end of a sentence.

-

[CLS] token at the beginning of the input sequence, to be used only if classifying.

-

The convention in BERT is:

(a) For sequence pairs:

tokens: [CLS] is this jack ##son ##ville ? [SEP] no it is not . [SEP]

type_ids: 0 0 0 0 0 0 0 0 1 1 1 1 1 1

(b) For single sequences:

tokens: [CLS] the dog is hairy . [SEP]

type_ids: 0 0 0 0 0 0 0

Where "type_ids" are used to indicate whether this is the first

sequence or the second sequence. The embedding vectors for type=0 and

type=1 were learned during pre-training and are added to the wordpiece

embedding vector (and position vector). This is not strictly necessary

since the [SEP] token unambigiously separates the sequences, but it makes

it easier for the model to learn the concept of sequences.

- For classification tasks, the first vector (corresponding to [CLS]) is

used as as the "sentence vector". The first token of every sequence is always the special classification embedding([CLS]). The final hidden state (i.e., out-put of Transformer) corresponding to this token is used as the aggregate sequence rep-resentation for classification tasks. For non-classification tasks, this vector is ignored.

Note that this only makes sense because the entire model is fine-tuned.

BERT is bidirectional, the [CLS] is encoded including all representative information of all tokens through the multi-layer encoding procedure. The representation of [CLS] is individual in different sentences. [CLS] is later fine-tuned on the downstream task. Only after fine-tuning, [CLS] aka the first token can be a meaningful representation of the whole sentence. Link

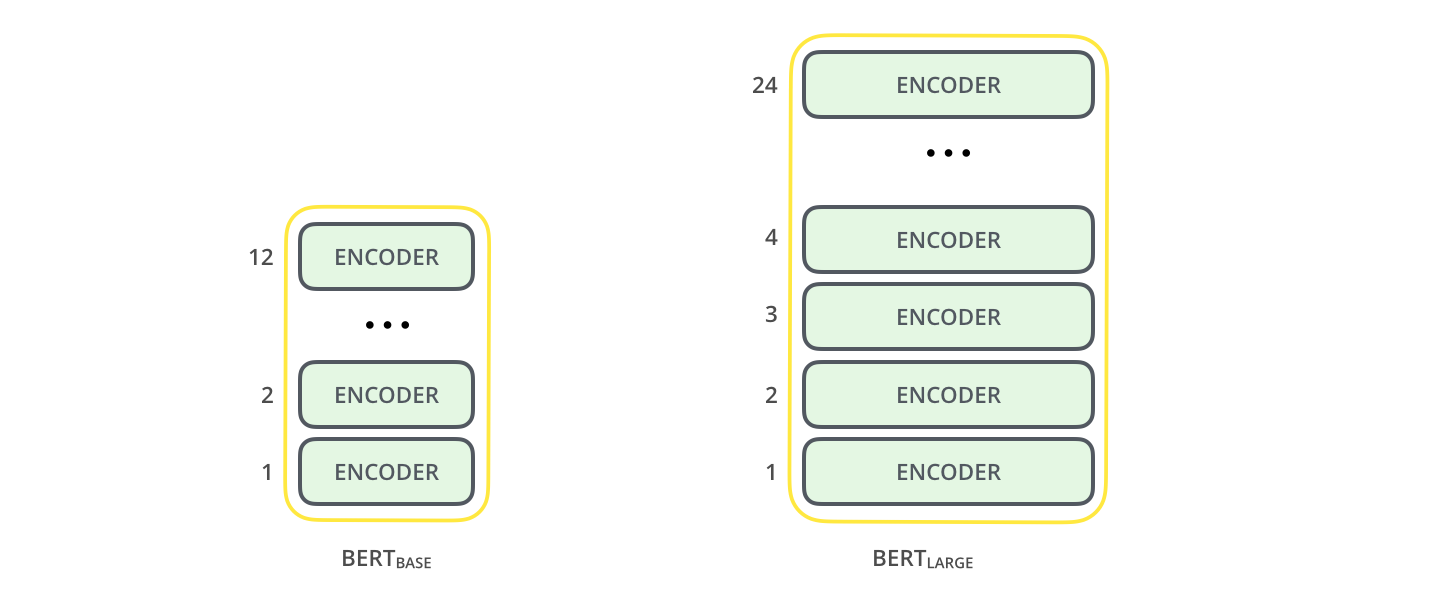

Encoders

BERT BASE (12 encoders) and BERT LARGE (24 encoders)

Training

-

Masked LM (MLM): Before feeding word sequences into BERT, 15% of the words in each sequence are replaced with a [MASK] token.

Training the language model in BERT is done by predicting 15% of the tokens in the input, that were randomly picked.

These tokens are pre-processed as follows — 80% are replaced with a “[MASK]” token, 10% with a random word, and 10% use the original word. The model then attempts to predict the original value of the masked words, based on the context provided by the other, non-masked, words in the sequence.

-

Next Sentence Prediction (NSP): In the BERT training process, the model receives pairs of sentences as input and learns to predict if the second sentence in the pair is the subsequent sentence in the original document.

Pre-trained Model

We will use op-for-op PyTorch reimplementation of Google's BERT model provided by pytorch-pretrained-BERT library. Refer the Github repo for more info: https://github.com/huggingface/pytorch-pretrained-BERT