Image Classification using CNNs and ResNets in PyTorch

Part 5 of "PyTorch: Zero to GANs"

This post is the fifth in a series of tutorials on building deep learning models with PyTorch, an open source neural networks library. Check out the full series:

- PyTorch Basics: Tensors & Gradients

- Linear Regression & Gradient Descent

- Image Classfication using Logistic Regression

- Training Deep Neural Networks on a GPU

- Image Classification using CNNs (this notebook)

- Generative Adverserial Networks (GANs)

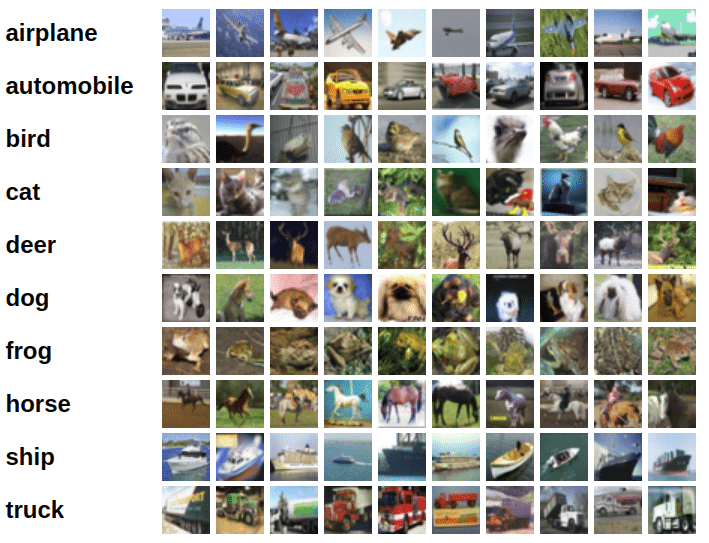

In the previous tutorial, we trained a feedfoward neural networks with a single hidden layer to classify handwritten digits from the MNIST dataset with over 97% accuracy. For this tutorial, we'll use the CIFAR10 dataset, which consists of 60000 32x32 px colour images in 10 classes. Here are some sample images from the dataset:

System Setup

This notebook is hosted on Jovian.ml, a platform for sharing data science projects. If you want to follow along and run the code as you read, you can choose the "Run on Kaggle" option from the "Run" dropdown above. Otherwise, to run the code on your machine, you can clone the notebook, install the required dependencies using conda, and start Juptyer by running the following commands:

pip install jovian --upgrade # Install the jovian library

jovian clone 05-cifar10-cnn # Download notebook & dependencies

cd 05-cifar10-cnn # Enter the created directory

conda env update # Install the dependencies

conda activate 05-cifar10-cnn # Activate virtual env

jupyter notebook # Start Jupyter

On older versions of conda, you might need to run source activate 05-cifar10-cnn to activate the environment. For a more detailed explanation of the above steps, check out the System setup section in the first notebook.

Before you start executing the code below, you may want to clear the cell outputs by selecting "Kernel > Restart and Clear Output" from the Jupyter notebook menu bar, to avoid confusion.

Exploring the Data

We'll download the images in PNG format from this page, using some helper functions from the torchvision and tarfile packages.

import os

import torch

import torchvision

import tarfile

from torchvision.datasets.utils import download_url